Tech

How AI Red Teaming Helps Organizations Detect And Defend Against Intelligent Threats

As businesses use artificial intelligence (AI) more and more in their daily operations, they are facing new and more difficult security problems. AI models can be changed in small ways that can lead to biased decisions, wrong classifications or even a complete system compromise. This is different from how traditional software works.

To protect against these risks, smart businesses are using AI red teaming, which is a proactive method that simulates smart attacks on AI systems to find weaknesses before hackers do.

AI red teaming helps businesses improve their defences, make sure their models behave ethically, and make AI security better overall by using the same methods that are used in cybersecurity penetration testing.

What is AI Red Teaming, and How is it Different from Traditional Security Testing?

Before we talk about the differences, it’s important to understand that AI red teaming goes beyond vulnerability scanning or penetration testing.

Regular security testing looks for weaknesses in infrastructure, applications and networks. These are places where threats take advantage of code, configurations or system flaws.

AI Red Teaming, on the other hand, tests the logic, data integrity, and behaviour of AI and machine learning models. It does so by putting them through adversarial inputs and manipulative tactics.

Key Differences Between AI Red Teaming and Traditional Testing

| Aspect | Traditional Security Testing | AI Red Teaming |

| Focus Area | Networks, servers, apps, configurations | AI models, datasets, and decision logic |

| Attack Type | Exploits system vulnerabilities | Exploits model weaknesses and biases |

| Testing Method | Static rule-based and code-focused | Dynamic, data-driven, and adversarial |

| Goal | Identify exploitable system flaws | Evaluate resilience, fairness, and interpretability |

| Outcome | Improved software or infrastructure security | More robust, ethical, and explainable AI models |

Why do Organizations Need AI Red Teaming for Their Systems and Models?

It’s important to know why AI red teaming is vital before getting into the specific benefits. The attack surface gets bigger as more people use AI. Attackers now have new ways to break into systems like data poisoning, prompt injection and model inversion.

Here are some reasons why businesses should make AI Red Teaming a top priority:

1. Find Hidden Weaknesses in Models

Adversarial attacks are when carefully crafted inputs trick AI systems into giving outputs that are unintentional or harmful. Red teaming mimics these things to find weak spots before they are misused.

2. Make AI More Secure and Dependable

Organisations learn how resistant their AI systems are to manipulation, bias exploitation or data tampering by putting them through realistic attack scenarios.

3. Ensure Ethical and Fair AI Behavior

AI red teaming also looks at how fair and unbiased decisions are made. For example, an AI model used for hiring or lending could unintentionally have a bias. Red teaming helps find and fix these kind of problems.

4. Stop Data Poisoning and Model Theft

Through API interactions, attackers can corrupt training datasets or get access to private model information. Red teaming helps businesses place defences against these kinds of attacks.

5. Maintain Regulatory & Compliance Readiness

AI red teaming gives you the testing rigor you need to make sure you are following the rules and being transparent as global standards for AI security keep changing (like the EU AI Act and NIST AI Risk Framework).

In short, AI Red Teaming protects against both fraudulent intentions and unintended results by making sure that AI systems work safely and predictably in the real world.

What Types of Vulnerabilities are Uncovered by AI Red Teaming?

AI red teaming can expose both technical and behavioral flaws which are unique to AI-driven systems. Let’s take a look at them:

1. Adversarial Example Attacks

Small changes to the input data, like changing the pixels in an image, can make models misclassify objects. For example, they might think a “stop sign” is a “yield sign.”

2. Data Poisoning

Attackers can put false data into training datasets, which can make models less accurate or cause negative results.

3. Model Inversion and Data Leakage

Attackers can get sensitive information from the training data by looking at the outputs of models, especially AI models with public APIs.

4. Prompt Injection Attacks (for LLMs)

People can use large language models to ignore system instructions, leak private information, or make harmful content.

5. Bias Exploitation

AI systems might unintentionally show societal biases or bias in the data they use. This can lead to unfair or unethical choices.

6. Model Theft (Extraction)

Adversaries can ask a model the same question over and over to make a replica, which is a breach of intellectual property.

AI red teaming finds these weaknesses to make sure that AI systems stay safe, reliable and able to handle new intelligent threats.

Advantages of Using AI Red Teaming

Let’s look at the benefits of using AI red teaming and how it prove to be a great investment for your company:

1. Enhanced AI Security

Detects and reduces threats unique to machine learning models. It helps to protect intellectual property and sensitive data.

2. Improved Model Robustness

Improves the model performance in distinct and hostile situations.

3. Ethical and Responsible AI Deployment

Makes sure that models act fairly, openly and without bias.

4. Greater Trust from Investors

Shows due diligence to regulators, customers, and investors that your AI systems are properly tested and trustworthy.

5. Continuous Learning and Improvement

Findings from red teaming exercises feed back into retraining cycles. It helps to create a culture of continuous security validation.

AI Red Teaming turns AI governance from compliance necessity into a strategic advantage.

Next Steps

If your business uses AI for decision-making, automation or analytics, you should think about adding AI Red Teaming to your risk management plan.

Here are the steps to get started:

- Find important AI models or LLMs that have an effect on important business results.

- Do baseline AI security checks to find out what are your current weaknesses.

- Hire people who are experts in adversarial machine learning and testing AI models.

- Use the results to improve ongoing model retraining and governance systems.

Companies like CyberNX offer AI Red Teaming services that simulate real-world attacks. These services are useful for organisations that are looking for expertise in offensive AI testing and want to improve their AI security.

Conclusion

AI is changing the way businesses work, but new ideas also come with risks. AI Red Teaming is an important safety measure because it finds the hidden weaknesses in AI systems and machine learning models.

Companies can make sure their AI technologies are safe, ethical, and able to resist both technical and behavioural manipulation by using adversarial testing methods and simulating smart attacks.

In an age of intelligent threats, the best defense is proactive testing.

Blog8 months ago

Blog8 months ago[PPT] Human Reproduction Class 12 Notes

- Blog8 months ago

Contribution of Indian Phycologists (4 Famous Algologist)

- Blog8 months ago

PG TRB Botany Study Material PDF Free Download

Blog8 months ago

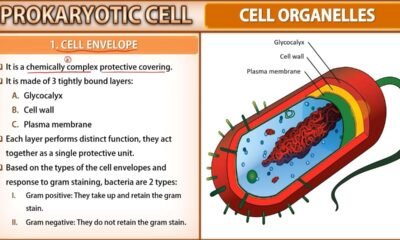

Blog8 months agoCell The Unit of Life Complete Notes | Class 11 & NEET Free Notes

Blog8 months ago

Blog8 months ago[PPT] The living world Class 11 Notes

Blog8 months ago

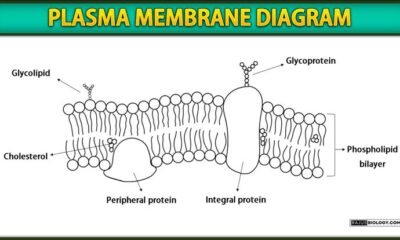

Blog8 months agoPlasma Membrane Structure and Functions | Free Biology Notes

Blog8 months ago

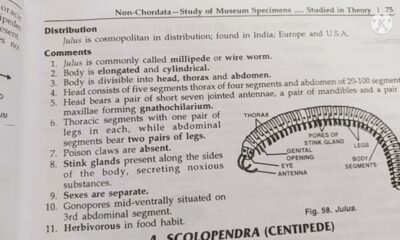

Blog8 months agoJulus General Characteristics | Free Biology Notes

Blog8 months ago

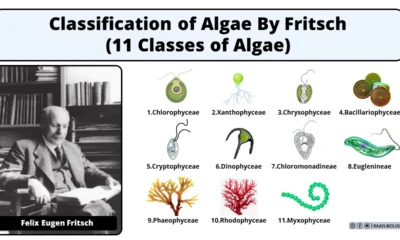

Blog8 months agoClassification of Algae By Fritsch (11 Classes of Algae)